All In One Digital Human Video Generator

Create your AI Avatar with just an image, a voice clip, and a video!

All In One Digital Human Video Generator

Create your AI Avatar with just an image, a voice clip, and a video!

Alibaba Digital Human Model: OmniAvatar

OmniAvatar: Innovating Full-Body Video Generation Driven by Audio

In the realm of virtual humans and digital content creation, the technology for full-body video generation driven by audio is rapidly evolving. OmniAvatar, jointly introduced by Zhejiang University and Alibaba, represents the latest breakthrough in this field. OmniAvatar generates full-body virtual human videos from audio input, addressing issues such as rigid movements and insufficient lip-sync precision in existing technologies.

Core Features and Technology

High-Precision Lip-Sync and Full-Body Motion Generation

OmniAvatar introduces a pixel-wise multi-layer audio embedding strategy, ensuring high synchronization between lip movements and audio while generating natural and fluid full-body motions. This technology makes the generated virtual human videos more realistic and vivid.

Multimodal Input and Fine-Grained Control

OmniAvatar supports precise control through text prompts, such as specifying the character's emotions and actions. Users can control the virtual human's emotions and actions through simple text descriptions like "a sorrowful monologue" or "an impassioned speech."

Dynamic Interaction and Scene Adaptation

OmniAvatar not only supports the generation of natural full-body motions but also enables interaction between the virtual human and surrounding objects, as well as dynamic background adjustments. For example, the virtual human can pick up a microphone and sing according to text prompts, or interact in different backgrounds.

Application Scenarios

Podcasts and Interview Videos

Using a single host photo and audio, OmniAvatar can automatically generate vivid host videos, suitable for podcasts and interview programs.

E-commerce Marketing Ads

OmniAvatar supports natural interaction between characters and objects, suitable for product display. For example, a virtual human can be generated to showcase products through text prompts, enhancing the appeal of advertisements.

Virtual Singer Performances

OmniAvatar excels in singing scenarios, generating precise lip-sync and natural body movements to create realistic stage performances.

Dynamic Scene Control

OmniAvatar supports dynamic background changes controlled by text prompts, such as generating a virtual human in a moving car.

Technical Principles

Pixel-Wise Multi-Layer Audio Embedding

OmniAvatar employs a pixel-wise multi-layer audio embedding strategy, aligning audio waveform features with video frames at the pixel level to significantly improve lip-sync precision.

LoRA-Based Training Method

OmniAvatar introduces a LoRA-based training method in the layers of the DiT model, retaining the base model's powerful capabilities while flexibly incorporating audio conditions.

Frame Overlapping Mechanism and Reference Image Embedding

To maintain consistency and temporal continuity in long video generation, OmniAvatar incorporates a frame overlapping mechanism and reference image embedding strategy.

Advantages and Limitations

Advantages

- Natural and Fluid Motion:OmniAvatar excels in generating natural and expressive character portraits with fluid motions.

- High-Precision Control:Through text prompts and LoRA training methods, precise control over virtual human actions and expressions is achieved.

- Wide Range of Applications:Suitable for a variety of video generation scenarios, including podcasts, human interactions, dynamic scenes, and singing.

Limitations

- Color Offset Issues:The model inherits some defects from the base model, such as color offset, which may cause color differences in the generated videos compared to real scenes.

- Error Accumulation in Long Videos:In long video generation, error accumulation may lead to a decline in video quality.

- Complex Text Control Limitations:Although text prompt control is supported, it is still difficult to distinguish between speakers or handle multi-character interactions in complex text control.

- Long Inference Time:Diffusion inference requires multiple denoising steps, resulting in a long inference time that is not suitable for real-time interaction.

Alibaba Digital Human Model: OmniTalker

OmniTalker: The Future of Real-Time Multimodal Interaction

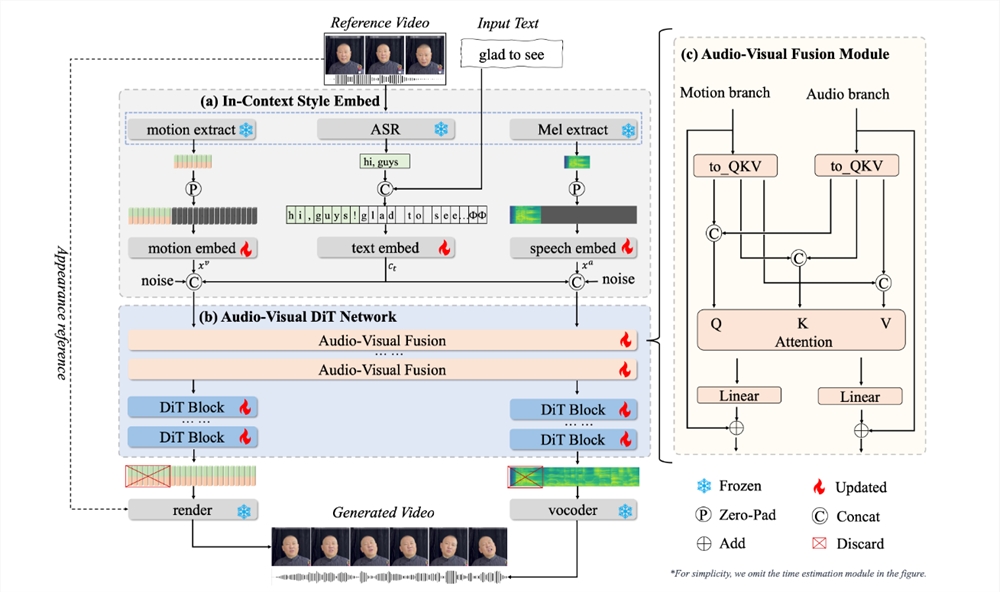

In the digital age, human-computer interaction is evolving from pure text to a more natural multimodal form (voice + video). OmniTalker, introduced by Alibaba's Tongyi Lab, is an important step in this evolution. OmniTalker is a real-time text-driven talking avatar generation framework that can convert text input into talking avatars with natural lip synchronization and supports real-time zero-shot style transfer.

Core Features and Technical Breakthroughs

Multimodal Fusion

OmniTalker supports the joint processing of four types of inputs: text, images, audio, and video, enabling the generation of richer and more natural interactive content.

Real-Time Interaction

The real-time capability of OmniTalker is one of its major highlights. With a model size of only 0.8B parameters and an inference speed of up to 25 frames per second, it can support real-time interactive scenarios such as AI video assistants and real-time virtual anchors.

Precise Synchronization

OmniTalker employs TMRoPE (Time-aligned Multimodal Rotary Position Embedding) technology to control the audio-video alignment error within ±40ms, ensuring high-precision temporal alignment of audio and video.

Zero-Shot Style Transfer

OmniTalker can simultaneously extract voice and facial styles from a single reference video without the need for additional training or style extraction modules, achieving zero-shot style transfer.

Application Scenarios

The application scenarios for OmniTalker are extensive, including but not limited to:

Virtual Assistants

OmniTalker can be integrated into conversational systems to support real-time virtual avatar dialogue.

Video Chats

In video chats, OmniTalker can generate natural lip synchronization and facial expressions.

Digital Human Generation

OmniTalker can generate digital avatars with personalized features.

Project Background and Motivation

With the development of large language models (LLMs) and generative AI, the demand for human-computer interaction to evolve from pure text to multimodal forms is increasing. Traditional text-driven talking avatar generation relies on cascaded pipelines, which have issues such as high latency, asynchronous audio and video, and inconsistent styles. OmniTalker aims to address these pain points and promote a more natural and real-time interactive experience.

Experience OmniTalker

The OmniHuman platform is set to integrate the OmniTalker model soon – stay tuned. The OmniTalker model will offer users powerful real-time text-driven talking avatar generation capabilities, supporting natural lip synchronization and real-time zero-shot style transfer. Users will be able to directly experience the powerful features of OmniTalker on the OmniHuman platform.